The history of computing spans millennia, beginning with rudimentary tools designed to aid in counting and evolving into the powerful, interconnected systems that shape our modern world. This remarkable journey reflects the human desire to solve complex problems, improve efficiency, and expand knowledge through the automation of information processing.

1. Early Tools and Mechanical Devices

Long before the invention of electronic computers, ancient civilizations developed tools to assist with arithmetic. One of the earliest and most well-known devices was the abacus, believed to have been used as early as 2300 BCE in Mesopotamia and later refined in China, Greece, and Rome. The abacus enabled users to perform calculations by moving beads along rods, embodying the principle of place value.

In the 17th century, mechanical computation began to take shape. John Napier introduced logarithms in 1614, which reduced multiplication and division to addition and subtraction. He also invented “Napier’s Bones” (1617), a set of numbered rods used as a manual multiplication aid. In 1623, Wilhelm Schickard developed the first known mechanical calculator, followed by Blaise Pascal’s Pascaline in 1642, which could add and subtract using a series of gears and wheels. Gottfried Wilhelm Leibniz, another pivotal figure, expanded on Pascal’s work with the Stepped Reckoner, which he began designing around 1671 and which could also multiply and divide.

2. The Birth of Programmability: Babbage and Lovelace

The 19th century marked a turning point in computing history with the work of Charles Babbage, often called the “father of the computer.” In the 1820s, Babbage designed the Difference Engine, a mechanical device intended to tabulate polynomial functions using the method of finite differences, which requires only repeated addition. Though never fully completed, it laid the groundwork for his more ambitious Analytical Engine, conceived in 1837.
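The kind of calculation the Difference Engine was meant to mechanize can be sketched in a few lines. The example below is only an illustration of the method of finite differences, not a model of Babbage's mechanism; the function names and the sample polynomial are assumptions chosen for this sketch.

```python
def initial_differences(values):
    """Return the first entry of each difference row for a list of values."""
    column = []
    row = list(values)
    while row:
        column.append(row[0])
        # Each new row holds the differences between neighbours in the old row.
        row = [b - a for a, b in zip(row, row[1:])]
    return column


def tabulate(differences, count):
    """Produce `count` successive polynomial values using only additions."""
    state = list(differences)
    results = []
    for _ in range(count):
        results.append(state[0])
        # Add each difference into the entry above it, starting from the value.
        for i in range(len(state) - 1):
            state[i] += state[i + 1]
    return results


def p(x):
    """Sample polynomial (an arbitrary choice for this sketch)."""
    return x * x + x + 41


# Three starting values are enough to seed a degree-2 polynomial.
seed = initial_differences([p(x) for x in range(3)])
table = tabulate(seed, 6)
print(table)  # [41, 43, 47, 53, 61, 71]
```

Once the seed values are set, every further entry in the table comes from addition alone, which is what made the approach suitable for gears and wheels.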

The Analytical Engine was revolutionary, featuring components analogous to the modern CPU, memory, and input/output systems. It was designed to be programmable using punched cards, an idea borrowed from Jacquard looms used in textile manufacturing.

Ada Lovelace, a mathematician and collaborator of Babbage, is often credited as the world’s first computer programmer. Her notes on the Analytical Engine, published in 1843, included what is widely regarded as the first algorithm intended to be carried out by a machine. Lovelace also envisioned the broader potential of computing beyond mathematics, speculating that such a machine might one day compose music or operate on symbols other than numbers.

3. Tabulating Machines and the Rise of Business Computing

In the late 19th century, the need for efficient data processing grew, especially in business and government. In response, Herman Hollerith, an American statistician, invented the tabulating machine to process the 1890 U.S. Census. His device used punched cards to store and sort data, significantly reducing the time required to compile census results.

Hollerith’s innovation laid the foundation for modern data processing and led to the formation of the Tabulating Machine Company. In 1911 that company merged with others to form the Computing-Tabulating-Recording Company, which was renamed IBM (International Business Machines) in 1924.

4. The Electronic Age Begins: 1930s–1940s

The development of electronic computing began in earnest in the 1930s and 1940s, driven by advances in electricity, electronics, and mathematical logic. Several milestones emerged during this period:

- Alan Turing, a British mathematician, proposed the concept of a universal machine in 1936, now known as the universal Turing machine. This abstract model can, in principle, carry out any computation that can be expressed as an algorithm, and it provided the theoretical basis for digital computers.

- Konrad Zuse, a German engineer, built the Z3 in 1941, the first working programmable computer. It used telephone relays and binary arithmetic.

- During World War II, military needs accelerated the development of computing. British codebreaking efforts at Bletchley Park, where Turing was a leading figure, were aided by Colossus (1943–44), an early electronic computer designed by engineer Tommy Flowers to help break German ciphers.

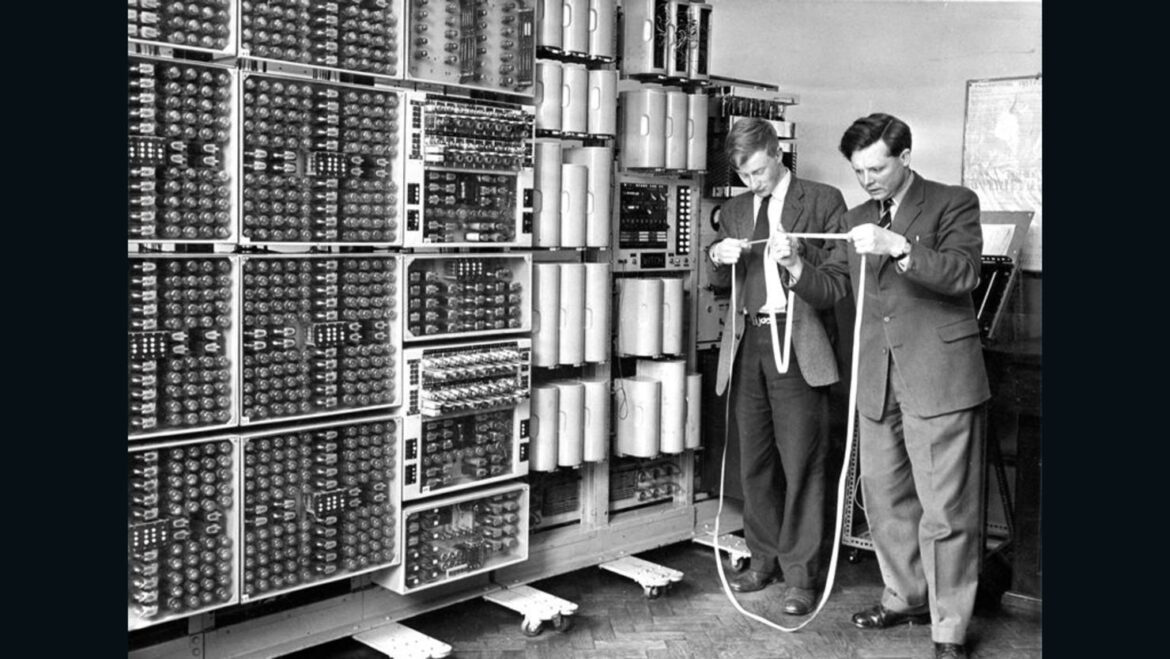

- In the U.S., Harvard Mark I (1944) and ENIAC (1946) were landmark projects. The Mark I was an electromechanical computer, while ENIAC (Electronic Numerical Integrator and Computer) was the first general-purpose, fully electronic digital computer.
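The Turing machine mentioned in the list above is simple enough to simulate directly. The following is a minimal sketch rather than Turing's own notation: the function name, the state names, and the rule table (which adds one to a binary number) are all assumptions made for illustration.

```python
def run_turing_machine(rules, tape, state="start", blank="_", max_steps=1000):
    """Simulate a one-tape Turing machine.

    `rules` maps (state, symbol) to (new_symbol, move, new_state),
    where move is -1 (left), +1 (right), or 0 (stay).
    """
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        symbol = cells.get(head, blank)
        new_symbol, move, state = rules[(state, symbol)]
        cells[head] = new_symbol
        head += move
    if not cells:
        return ""
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, blank) for i in range(lo, hi + 1)).strip(blank)


# Hypothetical rule table: increment a binary number written on the tape.
INCREMENT_RULES = {
    ("start", "0"): ("0", +1, "start"),  # scan right to the end of the number
    ("start", "1"): ("1", +1, "start"),
    ("start", "_"): ("_", -1, "carry"),  # step back onto the last digit
    ("carry", "1"): ("0", -1, "carry"),  # 1 + 1 = 0, carry continues left
    ("carry", "0"): ("1", 0, "halt"),    # absorb the carry and stop
    ("carry", "_"): ("1", 0, "halt"),    # number was all ones: add a digit
}

print(run_turing_machine(INCREMENT_RULES, "1011"))  # 1100
```

The simulator itself never changes; only the rule table does. That separation between a fixed mechanism and a replaceable description of behaviour is the core of the universal-machine idea.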

5. The Stored-Program Concept and Early Computers

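A crucial advance in computing came with the stored-program architecture, outlined in 1945 in the “First Draft of a Report on the EDVAC,” written by John von Neumann and drawing on the work of others on the EDVAC project. This design allowed both data and program instructions to be stored in a computer’s memory, so a machine could be given a new task by loading a different program rather than by being rewired.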
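A toy example may make the stored-program idea more concrete. The sketch below is an illustration only and does not correspond to the instruction set of EDVAC or any real machine; the four operations, the memory layout, and the function name are assumptions invented for this example.

```python
def run(memory):
    """Execute a toy stored-program machine.

    Instructions and data live in the same `memory` list. Instructions are
    (operation, address) tuples; data cells are plain integers.
    """
    pc = 0   # program counter: address of the next instruction
    acc = 0  # accumulator register
    while True:
        op, arg = memory[pc]
        pc += 1
        if op == "LOAD":
            acc = memory[arg]
        elif op == "ADD":
            acc += memory[arg]
        elif op == "STORE":
            memory[arg] = acc
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown operation: {op}")


# Addresses 0-3 hold the program; addresses 4-6 hold its data.
program = [
    ("LOAD", 4),   # acc = memory[4]
    ("ADD", 5),    # acc = acc + memory[5]
    ("STORE", 6),  # memory[6] = acc
    ("HALT", 0),
    2,             # address 4: first operand
    3,             # address 5: second operand
    0,             # address 6: result goes here
]

memory = run(list(program))
print(memory[6])  # 5
```

Giving the machine a different task means writing different contents into memory; the `run` loop stays the same.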

Early machines based on this concept included:

- Manchester Baby (1948), the first computer to run a stored program.

- EDSAC (1949), built at the University of Cambridge.

- UNIVAC I (1951), the first commercially available computer in the U.S., built by J. Presper Eckert and John Mauchly.

6. Transistors and the First Generations of Computers

Computers evolved rapidly in the 1950s and 1960s. First-generation machines relied on vacuum tubes, which were bulky, power-hungry, and prone to failure. The invention of the transistor at Bell Labs in 1947 (by Bardeen, Brattain, and Shockley) revolutionized electronics and computing.

Second-generation computers (late 1950s–early 1960s) used transistors instead of vacuum tubes. They were smaller, faster, and more reliable. This period also saw the rise of programming languages, such as FORTRAN (1957) and COBOL (1959), which made computers more accessible to scientists and businesses.

7. The Integrated Circuit and the Microprocessor Revolution

The integrated circuit (IC), developed independently by Jack Kilby (1958) and Robert Noyce (1959), enabled the miniaturization of electronics. This ushered in the third generation of computers in the 1960s, characterized by further reductions in size and cost and improvements in processing power.

The breakthrough moment came with the invention of the microprocessor in 1971. The Intel 4004, the first commercially available microprocessor, condensed the central processing unit onto a single chip. This innovation laid the groundwork for personal computing.

8. The Rise of Personal Computers (PCs)

The 1970s and 1980s witnessed the democratization of computing. No longer confined to governments or corporations, computers began to enter homes and schools.

Key milestones:

- 1975: The Altair 8800, often considered the first PC, sparked hobbyist interest. It used an Intel 8080 processor and, in its basic form, was programmed by flipping front-panel toggle switches, with results shown on rows of LEDs.

- 1976: Apple Computer was founded by Steve Jobs, Steve Wozniak, and Ronald Wayne. The Apple I and especially the Apple II (1977) were pivotal in popularizing home computing.

- 1981: IBM launched the IBM PC, a standard-setting personal computer that shipped with PC DOS, an operating system supplied by Microsoft and sold to other manufacturers as MS-DOS. The PC’s open architecture, built largely from off-the-shelf components, led to widespread cloning and expansion of the market.

9. The Software Explosion and Graphical Interfaces

The development of more user-friendly interfaces propelled computing into the mainstream. Initially reliant on text-based commands, computers gradually adopted graphical user interfaces (GUIs):

- Xerox PARC pioneered the GUI in the 1970s, combining windows and icons with the mouse, a pointing device invented earlier by Douglas Engelbart at the Stanford Research Institute.

- Apple’s Macintosh (1984) brought the GUI to a wider audience, influencing the design of future operating systems.

- Microsoft Windows, beginning in 1985 and achieving dominance with Windows 95, brought GUI-based computing to the mass market.

This period also saw an explosion in software development—from office productivity tools to educational and entertainment programs.

10. The Internet and the Information Age

Although the origins of the internet date back to the 1960s with ARPANET, it was the World Wide Web, proposed by Tim Berners-Lee in 1989, that made the internet easy for the general public to use. By the mid-1990s, the internet was transforming communication, commerce, and culture.

Email, search engines, social media, and e-commerce revolutionized how people interacted and accessed information. The internet became the backbone of global communication and economic infrastructure.

11. The Modern Era: Mobile Computing, AI, and the Cloud

The 21st century brought exponential growth in computing power, mobility, and connectivity:

- Smartphones, popularized by the iPhone in 2007, put powerful computers in users’ pockets.

- Cloud computing enables data storage and processing over the internet, empowering everything from enterprise applications to streaming services.

- Artificial Intelligence (AI), once a theoretical pursuit, became a central force in computing. Machine learning, natural language processing, and robotics are redefining industries.

- Quantum computing, still in its early stages, uses quantum-mechanical effects such as superposition and entanglement, and promises to solve certain classes of problems far faster than classical computers can.

Conclusion

The history of computing is a testament to human ingenuity, evolving from counting beads to quantum bits. It is a story of persistent innovation, visionaries, and transformative breakthroughs that have reshaped society. As AI, quantum computing, and bioinformatics continue to advance, the journey goes on, promising even more profound changes in how we compute, connect, and create.